Professionals from the fields of medicine, defense intelligence and cybersecurity shared insights during an artificial intelligence and cybersecurity panel at Augusta University’s Computer and Cyber Graduate Student Organization Fall Fest in late October.

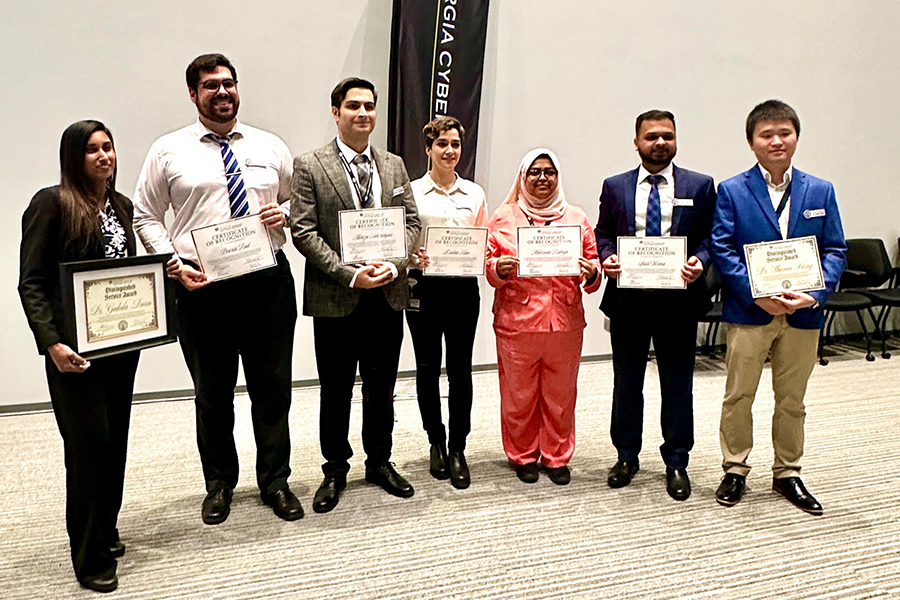

The event, hosted at the Georgia Cyber Innovation and Training Center, provided graduate students and professionals with a platform to explore the dynamic intersections of AI and cybersecurity while featuring cultural performances, graduate research presentations, a poster showcase, lab tours and engaging lightning talks led by industry specialists.

“This event is truly important for us,” said Alexander Schwarzmann, PhD, dean of the School of Computer and Cyber Sciences. “The strong turnout reflects how much events like this resonate with our students, and industry voices sharing real-world insights make the discussions even more impactful. The entire day was filled with dialogue between students eager to learn and professionals enthusiastic about sharing their knowledge.”

The highlight of the day was a panel discussion featuring experts from health care, defense intelligence, vehicle and transportation services, and industrial manufacturing. The panelists shared their insights on how AI is reshaping their industries and the crucial role that cybersecurity plays in safeguarding innovations.

Jason Orlosky, PhD, director of SCCS’s senior design program, conducts research on topics of AI, augmented reality and visualization with a focus on enhancing interaction with virtual content.

“Our goal should be to improve understanding and transparency between nations, institutions and people,” said Orlosky. “Improving global education using intelligent agents or other means of instruction will improve mutual understanding between individuals of different cultures and backgrounds. At the same time, AI is quickly becoming embedded in our lives as a tool, and we need to ensure that we continue to progress intellectually without developing an overreliance on it.”

Building trust and feeling secure with AI technology comes down to transparency, appropriate use and understanding. Knowing how AI models are trained, what data is used and how they operate is also key to ensuring positive outcomes from the technology.

Craig Albert, PhD, professor of political science and graduate director of AU’s PhD in Intelligence, Defense and Cybersecurity Policy and Master of Arts in Intelligence and Security Studies programs, contributed to the conversation on the escalating risks of data sharing, especially with the growing threat of deepfake technology.

“It’s extremely important to stay vigilant about the information we receive and share online,” Albert said. “With the ease of reading, sharing and posting, it’s becoming harder to tell fact from fiction, and we often have no idea how that information might be used.”

Albert also spoke about the rise of misinformation, disinformation and propaganda on social media and how they can influence public opinion, even escalating into information warfare.

The panel highlighted that hosting these discussions in an academic setting empowers students and faculty to engage with complex, real-world issues rather than shy away from them. As AI continues to evolve, bringing these conversations to the forefront allows people to recognize both the opportunities and challenges, especially around data privacy and security.

From a medical standpoint, Kim Capehart, PhD, associate dean of AU’s Dental College of Georgia, spoke about the slightly slower adoption of AI in health care due to critical concerns surrounding patient confidentiality.

“AI hasn’t been as readily embraced in medicine. If anything, we are still in the early stages of the digital journey because we’ve had to move carefully to protect patient privacy and rights,” Capehart said. “On one hand, it’s revolutionary. We can identify conditions earlier and create proactive, personalized treatment plans, but there are still concerns about data quality, cybersecurity risks and ethical implications.”

As AI takes on a larger role in health care, machine learning is streamlining time-intensive, high-volume tasks, allowing medical professionals to focus more on patient care. However, this growing reliance also heightens the need for effective regulation and responsible use, especially as AI advances in diagnosing, treating, preventing and potentially curing diseases.

The surge in AI adoption across sectors like defense, industrial manufacturing and health care underscores the increasing demand for cybersecurity. In response, the panelists issued a clear call to action: individuals and organizations must take preventive steps to protect digital privacy and security. They emphasized that staying informed and prepared is essential to harnessing AI for meaningful impact.

As the relationship between humans and AI continues to evolve, the experts emphasized the importance of seeing AI as a partner rather than merely a tool – a shift that requires both intentionality and oversight to maintain.

The full panel included:

- Mitchell Tatar – CJB Industries, Valdosta, Georgia

- Ernesto Cortez – Booz Allen Hamilton, Augusta, Georgia

- Craig Albert, PhD – Katherine Reese Pamplin College of Arts, Humanities, and Social Sciences, Augusta University, Augusta, Georgia

- Jason Orlosky, PhD – School of Computer & Cyber Sciences, Augusta University, Augusta, Georgia

- Kim Capehart, PhD – Dental College of Georgia, Augusta University, Augusta, Georgia

- Hanlin (Julia) Chen, PhD – Oak Ridge National Laboratory, Knoxville, Tennessee

Augusta University